UPDATE: This post has been updated to reflect new information learned in collaboration with the AWS S3 Replication, CloudTrail and Security Teams.

A comprehensive backup strategy is a cornerstone of any DR plan. But how would you distinguish between legitimate backup activity and malicious data exfiltration?

Cyber attackers are increasingly gaining access to cloud control planes, which include capabilities to perform cloud-native backups. Here, I’ll show how these tools can be leveraged to exfiltrate data from across an organization’s production environment.

In this blog, you'll look closely at how an attacker can abuse S3 Replication to efficiently migrate your data out of your environment. I hope you find it as entertaining to read as it was to create.

You will see this attack played out in separate narrative arcs from the perspective of four different actors listed here.

- The S3 Replication Service, as it benignly follows orders dictated as replication rules to replicate S3 data across buckets.

- The Evil S3 Replication Service, as its powers are abused to copy data to external locations.

- The attacker who gains control of the S3 Replication Service, co-opting the service for evil and using its lack of logging to stay under the radar.

- Members of the security operations center (SOC) team, who learn that they are partially blind to data movement via the S3 Replication Service but can now change their detection strategy to compensate.

Introducing the S3 Replication Service, its capabilities and use cases for good

The AWS S3 Service is no longer the 'Simple Storage Service' it was made out to be. With dozens of features and integrations, it has become the data store of choice for enterprise AWS customers. It has also become so complicated that it is difficult to understand, and thus to secure, all of its capabilities. One of S3's numerous features is the ability to copy data across regions and accounts, creating active backups of your data.

As you will see in this post, this feature is ripe for abuse and can be challenging to gain visibility into.

The Replication Service in AWS does exactly what you might think: when directed by rules, it copies S3 data between buckets.

The service takes marching orders from Replication Rules. You can configure Rules to tell the service to copy data to multiple buckets, creating multiple replicas of the same source object.

The destination buckets don’t even have to be in the same region or the same account as the source bucket.
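To make the shape of a replication rule concrete, here is a minimal sketch that builds a replication configuration with one rule per destination bucket. The field names follow the `ReplicationConfiguration` structure accepted by boto3's `put_bucket_replication`; the helper name `build_replication_config`, the rule IDs, the role ARN, the bucket names and the account IDs are all hypothetical placeholders, not values from this research.

```python
def build_replication_config(role_arn, destinations):
    """Build a ReplicationConfiguration dict with one rule per destination.

    `destinations` is a list of dicts with a required "bucket_arn" and an
    optional "account_id" (used for cross-account destinations).
    """
    rules = []
    for priority, dest in enumerate(destinations, start=1):
        destination = {"Bucket": dest["bucket_arn"]}
        if "account_id" in dest:
            # Cross-account destination: name the owning account explicitly.
            destination["Account"] = dest["account_id"]
        rules.append({
            "ID": f"replicate-to-{priority}",  # hypothetical rule name
            "Priority": priority,              # needed when several rules exist
            "Status": "Enabled",
            "Filter": {"Prefix": ""},          # empty prefix: every object
            "DeleteMarkerReplication": {"Status": "Disabled"},
            "Destination": destination,
        })
    return {"Role": role_arn, "Rules": rules}


# Hypothetical example: one same-account replica, one cross-account replica.
config = build_replication_config(
    "arn:aws:iam::111111111111:role/example-replication-role",
    [
        {"bucket_arn": "arn:aws:s3:::example-backup-bucket"},
        {"bucket_arn": "arn:aws:s3:::example-dr-bucket",
         "account_id": "222222222222"},
    ],
)
```

A dict like this would be passed as the `ReplicationConfiguration` argument of `put_bucket_replication` on the source bucket. Replication also requires versioning to be enabled on both the source and destination buckets.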

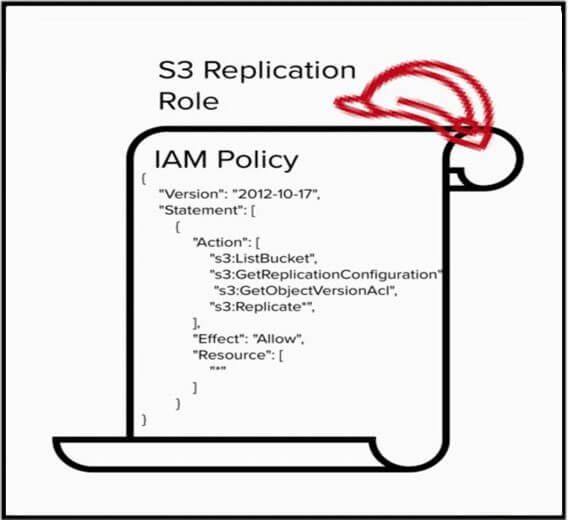

Its data-replicating capabilities are only possible if the service is granted permissions to do so. To enable the S3 Replication Service, you configure it to assume an IAM Role, which must be granted IAM permissions to access both the source and destination buckets.
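As a rough illustration of what "assuming an IAM Role" means here, the role's trust policy has to name the S3 service as a principal that may call `sts:AssumeRole`. The sketch below expresses such a trust policy as a Python dict; the constant and helper names are hypothetical, and the policy is a generic example rather than the one used in this research.

```python
# Generic trust policy allowing the S3 service to assume the role.
REPLICATION_TRUST_POLICY = {
    "Version": "2012-10-17",
    "Statement": [
        {
            "Effect": "Allow",
            "Principal": {"Service": "s3.amazonaws.com"},
            "Action": "sts:AssumeRole",
        }
    ],
}


def is_assumable_by_s3(trust_policy):
    """Return True if any Allow statement lets s3.amazonaws.com assume the role."""
    for statement in trust_policy.get("Statement", []):
        if statement.get("Effect") != "Allow":
            continue
        service = statement.get("Principal", {}).get("Service", [])
        services = [service] if isinstance(service, str) else service
        actions = statement.get("Action", [])
        actions = [actions] if isinstance(actions, str) else actions
        if "s3.amazonaws.com" in services and "sts:AssumeRole" in actions:
            return True
    return False
```

The trust policy only controls who may assume the role. What the role can then do to source and destination buckets is decided by its separate permissions policy.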

What are the consequences if an attacker gains the ability to use S3 Replication in AWS?

Cross-account replication can assist organizations in recovery from a data-loss event. In the wrong hands, however, the replication service allows threat actors to siphon off data to untrusted locations.

The S3 Replication Service has high abuse potential and as a result, is a prime target for attackers.

If an attacker were to gain the ability to create Replication Rules, they could direct the S3 Replication Service to copy data to an external, attacker-controlled bucket, even one that resides outside of the victim's AWS Organization.

What IAM permissions are required to replicate externally?

The S3 Replication Service requires permissions on S3 objects to both get the object from the source bucket and replicate the object to the external, attacker-controlled bucket.

Consider an IAM policy that defines the actions required for replication but neglects to scope those permissions to a resource, leaving the Resource field as "*". Such a policy is so permissive that it would allow the S3 Replication Service to copy objects to any bucket, even those outside of your account.
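A policy of this kind might look like the sketch below, written as a Python dict together with a small helper that flags wildcard resources. This is an illustrative reconstruction, not the exact policy from the original research: the action list follows the replication permissions AWS documents for the replication role, and the names `OVERLY_PERMISSIVE_POLICY` and `wildcard_statements` are hypothetical.

```python
# Illustrative only: replication actions granted on every resource.
OVERLY_PERMISSIVE_POLICY = {
    "Version": "2012-10-17",
    "Statement": [
        {
            "Effect": "Allow",
            "Action": [
                "s3:GetReplicationConfiguration",
                "s3:ListBucket",
                "s3:GetObjectVersionForReplication",
                "s3:GetObjectVersionAcl",
                "s3:GetObjectVersionTagging",
                "s3:ReplicateObject",
                "s3:ReplicateDelete",
                "s3:ReplicateTags",
            ],
            "Resource": "*",  # the problem: not scoped to any bucket
        }
    ],
}


def wildcard_statements(policy):
    """Return the Allow statements whose Resource is, or includes, "*"."""
    statements = policy.get("Statement", [])
    if isinstance(statements, dict):
        statements = [statements]
    flagged = []
    for statement in statements:
        if statement.get("Effect") != "Allow":
            continue
        resources = statement.get("Resource", [])
        if isinstance(resources, str):
            resources = [resources]
        if "*" in resources:
            flagged.append(statement)
    return flagged
```

Running `wildcard_statements(OVERLY_PERMISSIVE_POLICY)` returns the single statement above, which is the kind of finding worth surfacing in a review of replication roles.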

Given an overly permissive policy, an attacker would only need the ability to update replication rules, directing the Replication Service to copy data to an attacker-controlled bucket. No permissions are required on the objects themselves, just the ability to update rules.

To recap: instead of directly copying or moving data out of an account, an attacker can use a malicious replication rule to have the S3 Replication Service perform those same actions on their behalf. This is not a bug which can be remediated; rather, it is a type of cloud vulnerability called ‘feature abuse’.

How does the S3 Replication Service log its activities? And how does that aid an attacker in going unnoticed?

The authorized movement of data via the S3 Replication Service is less than transparent, making it especially difficult to hunt for data exfiltration and enabling an attacker to hide their activity in plain sight within your cloud environment.

Let’s look at when and where the Replication Service writes events to CloudTrail, depending on how your trail's S3 data-plane logging is scoped to buckets.

To gain visibility into events affecting S3 objects, you need to opt into the collection of S3 data events. The scope of these events can be configured to include ‘All current and future S3 buckets’, or individual buckets can be enumerated. This CloudTrail setting acts as a filter that determines which buckets are in scope for S3 data-plane logging.
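The two scoping options can be sketched as CloudTrail event selectors. The example below assumes the classic (non-advanced) event selector format, where a `DataResources` value of `arn:aws:s3` means all current and future buckets and a value of `arn:aws:s3:::<bucket>/` means a single bucket. The helper names and bucket names are hypothetical.

```python
ALL_BUCKETS_VALUE = "arn:aws:s3"  # classic selector value for every bucket


def all_buckets_selector():
    """Event selector logging S3 data events for all current and future buckets."""
    return {
        "ReadWriteType": "All",
        "IncludeManagementEvents": True,
        "DataResources": [
            {"Type": "AWS::S3::Object", "Values": [ALL_BUCKETS_VALUE]}
        ],
    }


def specific_buckets_selector(bucket_names):
    """Event selector logging S3 data events only for the named buckets."""
    values = [f"arn:aws:s3:::{name}/" for name in bucket_names]
    return {
        "ReadWriteType": "All",
        "IncludeManagementEvents": True,
        "DataResources": [{"Type": "AWS::S3::Object", "Values": values}],
    }


def selector_covers_bucket(selector, bucket_name):
    """Return True if the selector logs data events for at least part of the bucket."""
    bucket_prefix = f"arn:aws:s3:::{bucket_name}/"
    for resource in selector.get("DataResources", []):
        if resource.get("Type") != "AWS::S3::Object":
            continue
        for value in resource.get("Values", []):
            if value == ALL_BUCKETS_VALUE or value.startswith(bucket_prefix):
                return True
    return False
```

With `specific_buckets_selector(["example-crown-jewels"])`, a check for any other bucket name, including an attacker's external bucket, returns `False`. That is the filtering behavior the rest of this section depends on.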

When data is copied by the S3 Replication Service, a GetObject event is generated as the service retrieves the object for replication. This event is written to the Source Account's CloudTrail.

With logging scoped to all current and future buckets, the PutObject event that follows, which reveals the destination bucket, is recorded in both the External Destination Account and the Source Account.

What is the behavior of CloudTrail S3 data-plane logging if logs are limited to specific buckets?

To control costs, organizations often enable S3 data-plane logs only on their high-value buckets rather than paying for logging on ‘all current and future buckets’. How does this logging configuration change the visibility of the S3 Replication Service?

Following the GetObject event, a PutObject event could be recorded in the Destination Account in accordance with the Destination Account CloudTrail configuration. Notably, no PutObject event will be recorded in the source account.

In practice, this means that as data is copied by the Replication Service, the Source Account's CloudTrail contains no record revealing the external destination bucket.

When CloudTrail is configured to capture S3 data-plane events from specific Source Account buckets, a gap in logging exists, allowing for the copying of data without recording the data-plane event, PutObject, in the Source Account.
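The behavior described in this section can be summarized as a small model. This is a simplification of the observations reported in this post, not a statement of how CloudTrail is implemented, and the names `ALL_BUCKETS` and `source_account_events` are hypothetical.

```python
ALL_BUCKETS = "ALL"  # stands for 'all current and future S3 buckets'


def source_account_events(trail_scope, source_bucket):
    """Model which replication data events land in the Source Account's CloudTrail.

    `trail_scope` is either ALL_BUCKETS or a set of bucket names that the
    Source Account's trail logs S3 data events for.
    """
    if trail_scope == ALL_BUCKETS:
        # Both halves of the copy are visible, including the destination.
        return ["GetObject", "PutObject"]
    if source_bucket in trail_scope:
        # Only the read is visible; the destination bucket is never named.
        return ["GetObject"]
    # The source bucket is out of scope: nothing is recorded.
    return []


def destination_visible(trail_scope, source_bucket):
    """Return True if the Source Account can see where the data went."""
    return "PutObject" in source_account_events(trail_scope, source_bucket)
```

For a trail scoped to `{"example-crown-jewels"}`, `destination_visible` returns `False` even though the source bucket itself is being logged.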

Without data-plane logs scoped to include all current and future buckets, an attacker could update replication rules to replicate objects to their external bucket, then sit back and relax as the Replication Service silently moves data out of an organization.

Fortunately, the preventative controls are clear.

As always, when defining AWS IAM identity policies, do not leave the Resource field as a wildcard ("*"), which allows the policy's permissions to apply to any resource. Ensure the IAM Role the Replication Service assumes explicitly enumerates which buckets it is allowed to operate on.
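One way to apply this control is to generate the replication role's policy from an explicit list of buckets. The sketch below follows the general shape of the replication role policy in the AWS documentation; the function name `scoped_replication_policy` and the bucket names are hypothetical.

```python
def scoped_replication_policy(source_bucket, destination_buckets):
    """Build a replication policy limited to named source and destination buckets."""
    source_arn = f"arn:aws:s3:::{source_bucket}"
    return {
        "Version": "2012-10-17",
        "Statement": [
            {   # Read the replication configuration and list the source bucket.
                "Effect": "Allow",
                "Action": ["s3:GetReplicationConfiguration", "s3:ListBucket"],
                "Resource": [source_arn],
            },
            {   # Read object versions from the source bucket only.
                "Effect": "Allow",
                "Action": [
                    "s3:GetObjectVersionForReplication",
                    "s3:GetObjectVersionAcl",
                    "s3:GetObjectVersionTagging",
                ],
                "Resource": [f"{source_arn}/*"],
            },
            {   # Write replicas to the approved destination buckets only.
                "Effect": "Allow",
                "Action": [
                    "s3:ReplicateObject",
                    "s3:ReplicateDelete",
                    "s3:ReplicateTags",
                ],
                "Resource": [
                    f"arn:aws:s3:::{name}/*" for name in destination_buckets
                ],
            },
        ],
    }


# Hypothetical example: one source bucket, one approved backup bucket.
policy = scoped_replication_policy("example-prod-data", ["example-backup-bucket"])
```

With a policy like this attached to the role, a replication rule pointing at any other bucket should fail for lack of permissions, because the role cannot write there.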

What are the consequences for cloud defenders?

Threat hunters can use the malicious updating of replication rules as a clue that data exfiltration may be silently occurring in their environment.

Given how preventable abuse of the S3 Replication Service is, why does this lack of visibility matter to the threat hunters and cloud defenders of the world?

To see why these logging inconsistencies matter, we need to discuss the defender's mission in an organization. Cloud defenders are continually asking questions about the cloud environment in search of indicators of compromise. Their job is to detect when things go sideways – despite the best efforts of preventive measures.

A defender's bread and butter are logs, which they use to craft detections that provide early warning that data is moving outside the perimeter. The data-plane logs available to defenders may be constrained to known, high-value buckets to address cost concerns and to reduce the sheer volume of data-plane logs generated when capturing everything.

If data can be copied without the destination bucket being recorded, defenders can’t comprehensively alert on or hunt for the exfiltration of data by looking for bad buckets in data-plane events such as CopyObject or PutObject.

Defenders need to broaden the events they monitor to include the updating of replication rules so they can ensure they are comprehensively monitoring their data perimeter.

If a SOC is unable to enforce S3 data-plane logging on ‘all current and future buckets’, monitoring for and alerting on the PutBucketReplication event is the only way a defender will have visibility into external destination buckets. This event doesn’t indicate that data has been copied, only that the Replication Service has a rule configured to copy data.
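A detection along these lines could be sketched as follows. It takes a CloudTrail record as a dict, looks for PutBucketReplication events, and reports destination buckets that are not on an allow-list. The exact layout of `requestParameters` for this event is an assumption here, so the sketch searches the structure recursively for any `Destination` entry rather than relying on fixed paths. The function names, sample event and bucket ARNs are hypothetical.

```python
def find_destination_buckets(node):
    """Recursively collect every Destination.Bucket value in a nested structure."""
    found = []
    if isinstance(node, dict):
        destination = node.get("Destination")
        if isinstance(destination, dict) and isinstance(destination.get("Bucket"), str):
            found.append(destination["Bucket"])
        for value in node.values():
            found.extend(find_destination_buckets(value))
    elif isinstance(node, list):
        for item in node:
            found.extend(find_destination_buckets(item))
    return found


def untrusted_replication_destinations(event, trusted_bucket_arns):
    """Return destination bucket ARNs in a PutBucketReplication event that are not trusted."""
    if event.get("eventName") != "PutBucketReplication":
        return []
    params = event.get("requestParameters") or {}
    return [
        bucket for bucket in find_destination_buckets(params)
        if bucket not in trusted_bucket_arns
    ]


# Hypothetical, abbreviated event; the field layout is assumed, not captured.
sample_event = {
    "eventSource": "s3.amazonaws.com",
    "eventName": "PutBucketReplication",
    "requestParameters": {
        "bucketName": "example-prod-data",
        "ReplicationConfiguration": {
            "Role": "arn:aws:iam::111111111111:role/example-replication-role",
            "Rule": {
                "ID": "example-rule",
                "Status": "Enabled",
                "Destination": {"Bucket": "arn:aws:s3:::example-external-bucket"},
            },
        },
    },
}

alerts = untrusted_replication_destinations(
    sample_event, {"arn:aws:s3:::example-backup-bucket"}
)
```

Because PutBucketReplication is a management event, a check like this does not depend on S3 data-plane logging being enabled for the buckets involved.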

Lessons learned when abusing the Replication Service

The S3 Replication Service can help organizations become more ransomware resilient, but when building out a threat model it’s important to craft evil user stories that help us view cloud capabilities from the viewpoint of an attacker.

Due to the cost, a typical organization might be hesitant to enable S3 data-plane logging on all buckets in an account, preferring to selectively capture logs only on high-value buckets. As we’ve seen, that strategy results in a gap in S3 exfiltration visibility, since the PutObject event will not be written in the Source Account.

As of August 2022, AWS had declined to fix the logging issue with the S3 Replication service. In discussing this with AWS, the following solutions or ‘feature requests’ have been proposed.

- Include the destination bucket in the GetObject event

- Preferably, consider the GetObject and PutObject events as pairs - writing these two events to the Source Account as a result of the scoping filter of the source bucket rather than considering the data-plane log scope of the destination bucket.

Reporting Timeline

10/19/21

[Vectra]: Initial vulnerability report submitted, and receipt acknowledged same day.

10/20/21

[AWS]: Responded with their indication that they do not believe this to be a vulnerability:

“We do not believe the behavior you describe in this report presents a security concern, rather, it is expected behavior. We offer CloudWatch alarming that will log any changes made to a bucket's replication policy [1], which would give visibility into the destination of the data for the threat model described. See the section detailing "S3BucketChangesAlarm".”

10/20/21

[Vectra]: Requested confirmation that AWS does not consider the lack of logging a vulnerability. Indicated that I will depict AWS's response to this report as ‘won’t fix’ in any public disclosure.

10/26/21

[AWS]: AWS asked where I would publish the disclosure and if they could have an advance copy of the text.

10/26/21

[Vectra]: Acknowledged they could receive an advance copy of any public disclosure.

2/2/22

[Vectra]: Re-sent the original vulnerability report to an internal contact on the AWS Security team suggesting they put in a request for enhanced logging on the S3 replication service.

2/3/22

[AWS]: Acknowledged they put in the ticket internally.

7/20/22

[Vectra]: Published initial research on the subject, which described the logging issue as occurring only when Replication Time Control (RTC) was enabled.

7/25/22

[Vectra]: Re-tested findings and discovered that the lack of logging occurs with or without RTC enabled.

7/26/22

[Vectra]: Presented findings at fwd:cloudsec.

8/18/22

[Vectra and AWS]: Met to discuss findings. At this meeting it was understood that the CloudTrail S3 data-plane scoping filter is the mechanism which controls whether or not a PutObject event is written to the source account.

[1] https://docs.aws.amazon.com/awscloudtrail/latest/userguide/awscloudtrail-ug.pdf