Eight minutes is all it took. From exposed cloud assets to full administrative control in AWS, the attack documented by Sysdig shows how quickly a cloud environment can be compromised when automation, identity abuse, and permissive cloud controls converge.

There were no zero-days involved, no malware or novel exploit chain. The attacker relied entirely on valid credentials, native AWS services, and automated decision-making to move from initial access to administrator privileges at machine speed.

Over the past few weeks, we’ve looked at the early behavior of autonomous AI agents, how they began interacting and influencing each other inside shared environments, and how coordination quickly formed without humans in the loop.

This incident shows what happens when those dynamics are applied to a real cloud environment.

What we anticipated is now observable: Reconnaissance accelerates, and once an attacker controls an identity, they effectively control the environment. The result is not a new class of attack, but a dramatically faster one.

This breakdown walks through the intrusion step by step, highlighting where the attack accelerated, where defenders realistically could have intervened, and why identity-centric, behavioral detection is now the only viable way to stop cloud compromises that move at AI speed.

When Automation Collapses the Attack Timeline

This incident stands out because AI removed friction. The attacker did not probe cautiously or chain vulnerabilities together. Automation allowed them to enumerate services, evaluate privilege paths, and execute escalation faster than a human operator could match manually.

For defenders, most of the actions involved would look legitimate in isolation. API calls were authenticated, services were accessed through approved mechanisms, and permissions were abused, not bypassed.

The only reliable signal was behavioral: Who was acting, how quickly they moved, and what sequence of actions unfolded across services.

High-Level Attack Flow

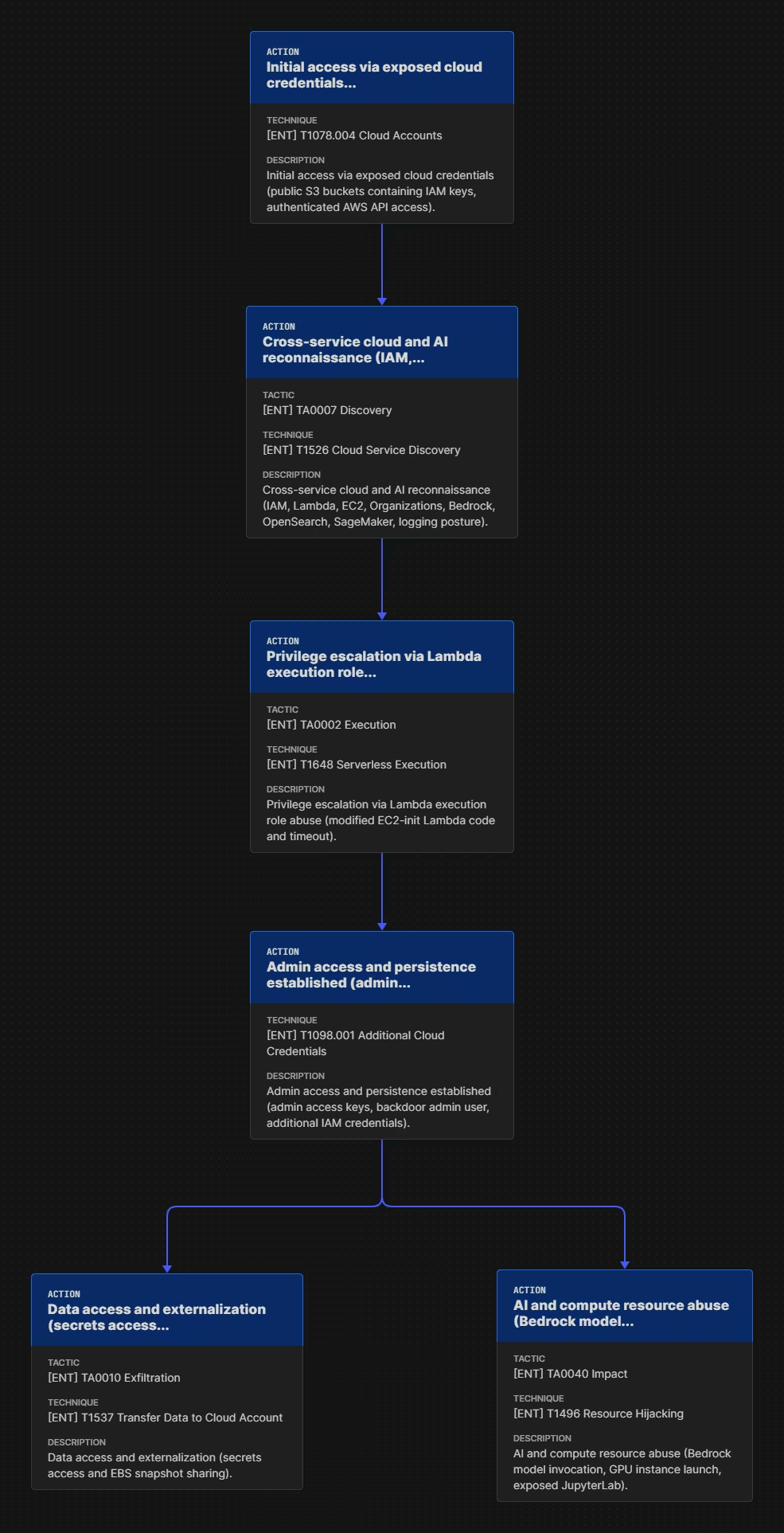

At a high level, the intrusion unfolded in six phases:

- Initial access through exposed assets

- Cross-service reconnaissance

- Lambda function abuse

- Programmatic privilege escalation

- Persistence and expansion

- Resource abuse and data externalization

While the full sequence involved many individual steps, only a few were critical to success. If any one of those steps is detected and stopped, the attack fails.

Phase 1: Initial Access Through Exposed Cloud Assets

What happened:

The attack began outside the AWS account and was not aimed at a specific organization.

The attacker searched for publicly accessible S3 buckets using naming conventions commonly associated with AI tooling and cloud automation. Any AWS environment following those conventions was a potential entry point.

Inside one bucket, the attacker found files containing IAM access keys. With those valid credentials, they authenticated directly into the victim AWS account.

From AWS’s perspective, a valid identity had logged in successfully.
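Defenders can run the same kind of search against their own storage before an attacker does. The sketch below is a minimal illustration that assumes object contents have already been downloaded into memory; `find_access_key_ids` and `scan_objects` are hypothetical helpers, not part of any AWS tool. The pattern reflects the documented format of AWS access key IDs (`AKIA` for long-term IAM user keys, `ASIA` for temporary keys). It does not detect secret access keys and is no substitute for a dedicated secret scanner or for S3 Block Public Access.

```python
import re

# AWS access key IDs are 20 characters: a 4-character prefix followed by
# 16 uppercase letters or digits. "AKIA" marks long-term IAM user keys,
# "ASIA" marks temporary (STS) keys.
ACCESS_KEY_ID = re.compile(r"\b(?:AKIA|ASIA)[A-Z0-9]{16}\b")


def find_access_key_ids(text: str) -> list[str]:
    """Return every candidate AWS access key ID found in a blob of text."""
    return [m.group(0) for m in ACCESS_KEY_ID.finditer(text)]


def scan_objects(objects: dict[str, str]) -> dict[str, list[str]]:
    """Map object key -> access key IDs found in that object's contents.

    `objects` is a hypothetical in-memory stand-in for files pulled from a
    bucket; objects with no matches are left out of the result.
    """
    findings = {}
    for name, body in objects.items():
        hits = find_access_key_ids(body)
        if hits:
            findings[name] = hits
    return findings
```

Any hit is worth treating as a compromised credential: rotate it first, then work out how it became reachable.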

Why this matters:

This is where many cloud incidents quietly begin. Cloud security posture issues often create the opening, but identity misuse determines how far an attacker can go.

Once authenticated, the attacker moved immediately into the next phase of the attack.

Phase 2: Cross-Service Reconnaissance at Machine Speed

What happened:

After authentication, the attacker performed broad reconnaissance across multiple AWS services including S3, Lambda, EC2, IAM, Bedrock, and CloudTrail.

The activity was fast, systematic and automated. API responses were ingested and evaluated in real time to identify viable escalation paths.

Why this matters:

Reconnaissance enables everything that follows. Without visibility into services, roles, and trust, escalation paths remain hidden.

This is where automation changes the equation. AI-assisted tooling allows attackers to ingest API responses, evaluate permissions, and identify viable paths in seconds.

For SOC teams, this phase represents the earliest realistic opportunity to intervene. Once privilege escalation begins, response windows shrink dramatically.
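One way to turn this into a signal is to measure breadth over time: how many distinct services a single identity enumerates within a short window. The sketch below assumes CloudTrail management events have already been parsed into dictionaries that use CloudTrail's field names (`eventTime`, `eventSource`, `eventName`, `userIdentity.arn`). Treating `List*`, `Describe*` and `Get*` calls as reconnaissance is a rough heuristic (CloudTrail's own `readOnly` field is a more precise filter where available), and the five-minute window and five-service threshold are illustrative, not tuned values.

```python
from collections import defaultdict, deque
from datetime import datetime, timedelta

READ_PREFIXES = ("List", "Describe", "Get")  # rough proxy for read-only calls


def parse_time(ts: str) -> datetime:
    # CloudTrail eventTime values look like "2025-01-01T12:00:00Z".
    return datetime.strptime(ts, "%Y-%m-%dT%H:%M:%SZ")


def flag_broad_recon(events, window=timedelta(minutes=5), min_services=5):
    """Return identity ARNs whose read-style calls touch at least
    `min_services` distinct services inside any sliding time window."""
    by_identity = defaultdict(list)
    for e in events:
        if e["eventName"].startswith(READ_PREFIXES):
            by_identity[e["userIdentity"]["arn"]].append(
                (parse_time(e["eventTime"]), e["eventSource"])
            )

    flagged = set()
    for arn, calls in by_identity.items():
        calls.sort()  # chronological order per identity
        recent = deque()
        for t, source in calls:
            recent.append((t, source))
            # Evict calls older than the window that ends at time t.
            while t - recent[0][0] > window:
                recent.popleft()
            if len({s for _, s in recent}) >= min_services:
                flagged.add(arn)
                break
    return flagged
```

The same enumeration spread over hours by a human administrator stays below the threshold; compressed into seconds, it does not.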

This behavior aligns closely with techniques documented in MITRE ATLAS and MITRE ATT&CK for valid account abuse and cloud service discovery (in ATT&CK, T1078 Valid Accounts and T1526 Cloud Service Discovery). Rather than inventing new techniques, the attacker accelerated known behaviors using automation.

Phase 3: Lambda Abuse as the Primary Choke Point

What happened:

The attacker focused on an existing AWS Lambda function and used it as a privilege escalation mechanism.

First, they altered the function’s code and increased its execution timeout. This Lambda had an execution role with sufficient permissions to create IAM users and access keys.

Next, they invoked the modified function. When it executed, the function created new IAM access keys for an identity with administrative privileges.

Each step was legitimate on its own, but taken together, they turned a routine automation component into an escalation path.
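The sequence can be correlated from CloudTrail by pairing a Lambda code update with IAM credential activity performed by that function's execution role shortly afterwards. This is a minimal sketch under several assumptions: events are parsed CloudTrail dictionaries, `function_roles` is a hypothetical inventory mapping each function name to its execution role name, and `functionName` in the request is a plain name rather than an ARN. Lambda records `UpdateFunctionCode` with an API version suffix (for example `UpdateFunctionCode20150331v2`), so the sketch matches on the prefix. It skips the invocation step because `Invoke` is a data event that CloudTrail records only when Lambda data events are enabled.

```python
from datetime import datetime, timedelta

# IAM calls that mint or elevate credentials (illustrative subset).
IAM_CRED_EVENTS = {"CreateAccessKey", "CreateUser", "AttachUserPolicy"}


def parse_time(ts: str) -> datetime:
    return datetime.strptime(ts, "%Y-%m-%dT%H:%M:%SZ")


def lambda_escalation_alerts(events, function_roles, window=timedelta(minutes=10)):
    """Pair each Lambda code update with IAM credential activity performed
    by that function's execution role within `window` afterwards.

    function_roles: hypothetical inventory, function name -> execution role name.
    """
    events = sorted(events, key=lambda e: parse_time(e["eventTime"]))
    last_update = {}  # function name -> (update time, ARN that changed the code)
    alerts = []
    for e in events:
        t = parse_time(e["eventTime"])
        name = e["eventName"]
        if e["eventSource"] == "lambda.amazonaws.com" and name.startswith("UpdateFunctionCode"):
            # Assumes a plain function name; real requests may carry an ARN.
            fn = e["requestParameters"]["functionName"]
            last_update[fn] = (t, e["userIdentity"]["arn"])
        elif e["eventSource"] == "iam.amazonaws.com" and name in IAM_CRED_EVENTS:
            caller = e["userIdentity"]["arn"]
            for fn, (updated_at, editor) in last_update.items():
                role = function_roles.get(fn)
                # Execution-role sessions appear as .../assumed-role/<role>/<session>.
                if role and f":assumed-role/{role}/" in caller and t - updated_at <= window:
                    alerts.append({"function": fn, "modified_by": editor,
                                   "iam_action": name, "at": e["eventTime"]})
    return alerts
```

The alert names both halves of the chain: who changed the code, and what the function's role did next.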

Why this matters:

This sequence was the point where the attack became irreversible.

If any part of it fails, the chain breaks. If the function cannot be modified, it cannot be abused. If it is never executed, no new credentials are created. If it executes but cannot create admin access keys, the attacker stalls.
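Breaking the first link is often the cheapest option. As one illustration, assuming the account belongs to an AWS Organization, a service control policy (SCP) can deny Lambda code and configuration changes to every principal except a designated deployment role. The Python below only builds that policy document; the role name `hypothetical-ci-deployer` is a placeholder, and any real guardrail would need testing against existing deployment paths. SCPs do not apply to the organization's management account.

```python
import json


def lambda_code_guardrail(deployer_role_arn_pattern: str) -> dict:
    """Build an SCP that denies Lambda code/configuration changes to every
    principal whose ARN does not match the given deployer pattern."""
    return {
        "Version": "2012-10-17",
        "Statement": [{
            "Sid": "DenyLambdaChangesOutsidePipeline",
            "Effect": "Deny",
            "Action": [
                "lambda:UpdateFunctionCode",
                "lambda:UpdateFunctionConfiguration",
            ],
            "Resource": "*",
            # aws:PrincipalArn is a global condition key; ArnNotLike allows wildcards.
            "Condition": {
                "ArnNotLike": {"aws:PrincipalArn": deployer_role_arn_pattern}
            },
        }],
    }


# Placeholder role name: substitute your own deployment role.
policy = lambda_code_guardrail("arn:aws:iam::*:role/hypothetical-ci-deployer")
print(json.dumps(policy, indent=2))
```

The trade-off is operational: every legitimate change must flow through the designated role, which also makes unexpected code changes far easier to spot.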

Lambda functions concentrate automation and privilege in a way few other services do. Execution roles are often broader than intended. Code changes are infrequent and lightly scrutinized. Invocation is expected and common.

Nothing about this sequence violates IAM policy or triggers a clear control failure. The risk only becomes visible when you look at how the function’s behavior changed and what it enabled immediately afterward.

This is a recurring pattern in modern cloud attacks. Attackers do not need to exploit infrastructure. They repurpose it.

Phase 4: Programmatic Privilege Escalation

What happened:

Using the modified Lambda function, the attacker created new IAM access keys for an identity with administrative privileges.

This was privilege escalation without exploitation.

The attacker simply used an execution role to perform actions it was allowed to perform, but not in a way its creators intended.
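Because the execution role here could create IAM users and access keys, auditing which escalation-capable actions a role's policies allow can surface similar paths before an attacker finds them. This is a rough triage sketch, not an IAM policy evaluator: it looks only at `Allow` statements and their `Action` element, ignores `Deny`, `NotAction`, `Resource` and `Condition`, and the list of escalation actions is a hypothetical, non-exhaustive sample. IAM action names are case-insensitive and support `*` and `?` wildcards, which `fnmatchcase` approximates after lowercasing.

```python
from fnmatch import fnmatchcase

# Actions that let a principal mint or elevate credentials (not exhaustive).
ESCALATION_ACTIONS = [
    "iam:CreateUser", "iam:CreateAccessKey", "iam:AttachUserPolicy",
    "iam:PutUserPolicy", "iam:CreateLoginProfile", "iam:UpdateAssumeRolePolicy",
]


def _as_list(value):
    # IAM allows a single string or a list for Action, and a dict or list for Statement.
    return value if isinstance(value, list) else [value]


def escalation_grants(policy: dict) -> set:
    """Return escalation-capable actions matched by the policy's Allow statements.

    Triage only: Deny, NotAction, Resource and Condition are ignored.
    """
    granted = set()
    for stmt in _as_list(policy.get("Statement", [])):
        if stmt.get("Effect") != "Allow":
            continue
        for pattern in _as_list(stmt.get("Action", [])):
            for action in ESCALATION_ACTIONS:
                # Case-insensitive wildcard match, approximating IAM semantics.
                if fnmatchcase(action.lower(), pattern.lower()):
                    granted.add(action)
    return granted
```

A non-empty result for a Lambda execution role is a prompt to ask whether the function genuinely needs that permission.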

From that moment forward, the attacker effectively owned the account.

Why this matters:

For defenders, this is where traditional security controls often fail. Identity and access management systems enforce policy, not intent.

Once administrative access exists, most controls step aside: the scope of response narrows and remediation becomes significantly more complex.

Phase 5: Persistence and Expansion

What happened:

With administrative access secured, the attacker focused on persistence.

Specifically, they created a new IAM user and attached the AdministratorAccess policy, generated additional access keys for existing users, and retrieved secrets from Secrets Manager and SSM Parameter Store.

Each action expanded the attacker’s foothold and reduced the effectiveness of remediation. Even if one credential was revoked, others would remain.
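These persistence actions leave a recognizable footprint when grouped by caller. The sketch below, assuming parsed CloudTrail events, tags each caller with the persistence-style behaviors it performed and reports callers that show at least three distinct ones; the threshold and the choice of indicators are illustrative assumptions. `arn:aws:iam::aws:policy/AdministratorAccess` is the ARN of AWS's managed administrator policy. A production detection would also bound the search to a time window, since timing is part of the signal.

```python
from collections import defaultdict

ADMIN_POLICY_ARN = "arn:aws:iam::aws:policy/AdministratorAccess"
SECRET_READS = {
    ("secretsmanager.amazonaws.com", "GetSecretValue"),
    ("ssm.amazonaws.com", "GetParameter"),
    ("ssm.amazonaws.com", "GetParameters"),
}


def persistence_indicators(events, min_indicators=3):
    """Return {caller ARN: sorted indicator tags} for callers showing at
    least `min_indicators` distinct persistence-style behaviors."""
    found = defaultdict(set)
    for e in events:
        arn = e["userIdentity"]["arn"]
        src, name = e["eventSource"], e["eventName"]
        params = e.get("requestParameters") or {}
        if src == "iam.amazonaws.com" and name == "CreateUser":
            found[arn].add("new_iam_user")
        elif (src == "iam.amazonaws.com" and name == "AttachUserPolicy"
              and params.get("policyArn") == ADMIN_POLICY_ARN):
            found[arn].add("admin_policy_attached")
        elif (src == "iam.amazonaws.com" and name == "CreateAccessKey"
              and params.get("userName")
              and not arn.endswith("/" + params["userName"])):
            # A key minted for someone other than the caller.
            found[arn].add("key_for_other_user")
        elif (src, name) in SECRET_READS:
            found[arn].add("secret_read")
    return {arn: sorted(tags) for arn, tags in found.items() if len(tags) >= min_indicators}
```

Individually, each of these calls is routine administration; the combination from one caller is what stands out.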

Why this matters:

This phase reflects a shift from access to assurance. The attacker was ensuring continued control even if defenders responded.

Again, these actions were performed through legitimate APIs using valid permissions. The difference between maintenance and compromise lies entirely in behavior and timing.

Phase 6: Resource Abuse and Data Externalization

What happened:

The attacker moved to impact.

They launched a high-end GPU instance with an open security group and an exposed JupyterLab interface.

They invoked Bedrock models and shared an EBS snapshot externally.
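Each of these impact actions typically surfaces in CloudTrail as its own event: `RunInstances`, `AuthorizeSecurityGroupIngress`, `ModifySnapshotAttribute` and, for Bedrock, `InvokeModel`. The sketch below labels them under stated assumptions: the exact nesting of `requestParameters` varies, so the code searches nested parameters rather than relying on a fixed layout; the GPU check (instance families starting with `p` or `g` followed by a digit) is a crude heuristic; and `bedrock_users` is a hypothetical allowlist of identities expected to call Bedrock.

```python
import json


def _find(obj, key):
    """Yield every value stored under `key` anywhere in nested dicts/lists."""
    if isinstance(obj, dict):
        for k, v in obj.items():
            if k == key:
                yield v
            yield from _find(v, key)
    elif isinstance(obj, list):
        for item in obj:
            yield from _find(item, key)


def impact_signal(event, own_account, bedrock_users=frozenset()):
    """Return a label if a CloudTrail-style event resembles impact activity, else None."""
    src, name = event["eventSource"], event["eventName"]
    params = event.get("requestParameters") or {}
    if src == "ec2.amazonaws.com" and name == "RunInstances":
        family = params.get("instanceType", "").split(".")[0]  # e.g. "p4d"
        if family[:1] in ("p", "g") and family[1:2].isdigit():
            return "gpu_instance_launched"
    elif src == "ec2.amazonaws.com" and name == "AuthorizeSecurityGroupIngress":
        if "0.0.0.0/0" in json.dumps(params):  # any rule open to the internet
            return "ingress_open_to_internet"
    elif src == "ec2.amazonaws.com" and name == "ModifySnapshotAttribute":
        # Only look at permissions being added, not removed.
        targets = {v for add in _find(params, "add")
                   for k in ("userId", "group") for v in _find(add, k)}
        if targets - {own_account}:
            return "snapshot_shared_externally"
    elif src == "bedrock.amazonaws.com" and name == "InvokeModel":
        if event["userIdentity"]["arn"] not in bedrock_users:
            return "unexpected_bedrock_use"
    return None
```

By this stage the signals are high-confidence but late; they confirm a compromise rather than prevent one.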

Why this matters:

At this point, the compromise had already succeeded. These actions signal clear attacker intent: resource abuse, potential data exfiltration, and monetization.

This stage aligns with patterns discussed in our podcast episode on how attackers target AWS Bedrock, including LLMjacking, cost abuse, and data exposure once cloud identities are compromised.

“What If...?”

The documented attack stopped where it did because it was observed, not because the attacker ran out of options.

With sustained admin access, the attacker could have:

- Established long-term persistence through trust policies and cross-account roles

- Modified CI/CD pipelines to inject malicious code into production

- Created shadow infrastructure that mirrored legitimate workloads

- Performed silent, incremental data exfiltration using snapshots and replication

- Used AI services continuously, blending cost abuse into normal usage

- Pivoted into connected AWS accounts or SaaS platforms

None of these actions require additional exploits. They require time. Eight minutes was just the entry point.

The Critical Need for Post-Compromise Detection

By the time impact occurs, prevention has already failed.

The defensive value shifts earlier in the attack chain, before privilege escalation and persistence lock in attacker control.

While the full attack chain is long, defenders can focus on a reduced set of critical steps along the attack flow:

- Broad, rapid cross-service reconnaissance

- Modification of an existing Lambda function

- Programmatic creation of admin access

- Establishment of persistent IAM identities

- Externalization of data through cloud-native mechanisms

These are behaviors that deviate sharply from normal usage patterns when viewed in sequence.

The challenge is correlation.

Most cloud security controls are static by design and struggle with intent.

In this incident:

- Credentials were valid

- APIs were used as designed

- Permissions existed legitimately

The only abnormality was behavior over time.

This is the detection gap that fast-moving, AI-assisted attacks exploit.
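Correlating the critical steps listed above amounts to asking whether they occur in order within a bounded time window, even when different identities perform them (here, the compromised user and then the Lambda execution role). The sketch below is a minimal, account-wide illustration: each stage is matched on `eventName` alone, the stage rules and the 30-minute window are hypothetical, and events are assumed to be parsed CloudTrail dictionaries. It returns the longest in-order run of stages observed from any starting reconnaissance event.

```python
from datetime import datetime, timedelta

# Stage order mirrors the critical steps; matching rules are coarse and illustrative.
STAGES = [
    ("recon", lambda e: e["eventName"].startswith(("List", "Describe"))),
    ("lambda_modified", lambda e: e["eventName"].startswith("UpdateFunctionCode")),
    ("admin_access", lambda e: e["eventName"] in ("CreateAccessKey", "AttachUserPolicy")),
    ("persistent_identity", lambda e: e["eventName"] == "CreateUser"),
    ("externalization", lambda e: e["eventName"] == "ModifySnapshotAttribute"),
]


def parse_time(ts: str) -> datetime:
    return datetime.strptime(ts, "%Y-%m-%dT%H:%M:%SZ")


def _chain_from(events, i, window):
    """Greedily match STAGES in order, starting at events[i], within `window`."""
    start = parse_time(events[i]["eventTime"])
    reached = []
    for e in events[i:]:
        if len(reached) == len(STAGES) or parse_time(e["eventTime"]) - start > window:
            break
        name, matches = STAGES[len(reached)]
        if matches(e):
            reached.append((name, e["userIdentity"]["arn"]))
    return reached


def correlate(events, window=timedelta(minutes=30)):
    """Return [(stage, identity ARN), ...] for the longest in-order chain."""
    events = sorted(events, key=lambda e: parse_time(e["eventTime"]))
    best = []
    for i, e in enumerate(events):
        if STAGES[0][1](e):  # every chain starts with reconnaissance
            chain = _chain_from(events, i, window)
            if len(chain) > len(best):
                best = chain
    return best
```

Recording which identity completed each stage matters: the chain crosses from a user to a role, which per-identity rules alone would miss.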

Why Identity-Centric, Behavioral Detection Is Required

When attacks move at machine speed, detection must operate at machine speed too.

This requires understanding:

- How human, machine, and agentic identities normally behave

- How services are usually accessed

- What sequences of actions are typical or rare

- How behavior changes when control shifts to an attacker

Identity-centric detection treats cloud identities (human, non-human, and agentic) as the primary signal. Behavioral AI models how those identities operate across services and environments.
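A very small version of this idea is a per-identity baseline of which service and action pairs each identity normally uses, with anything outside it flagged as novel. The sketch below is only that: a first-seen check over parsed CloudTrail events, with the hypothetical `IdentityBaseline` class standing in for the far richer behavioral modeling described here. It is not how the Vectra AI Platform works. A brand-new identity, such as a freshly created IAM user, has an empty baseline, so everything it does is reported as novel.

```python
from collections import defaultdict


class IdentityBaseline:
    """Track which (service, action) pairs each identity has used before."""

    def __init__(self):
        self.seen = defaultdict(set)  # identity ARN -> {(eventSource, eventName)}

    def learn(self, events):
        """Add historical events to the baseline."""
        for e in events:
            self.seen[e["userIdentity"]["arn"]].add((e["eventSource"], e["eventName"]))

    def novel_actions(self, events):
        """Return {identity ARN: pairs never seen in that identity's baseline}."""
        novel = defaultdict(set)
        for e in events:
            arn = e["userIdentity"]["arn"]
            pair = (e["eventSource"], e["eventName"])
            if pair not in self.seen[arn]:
                novel[arn].add(pair)
        return dict(novel)
```

For a Lambda execution role that has only ever read and written S3 objects, a first `CreateAccessKey` call is exactly the kind of shift the text describes.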

When reconnaissance accelerates, when Lambda behavior changes, when privilege is granted unexpectedly, those shifts are detected in real time.

This is the only practical way to interrupt attacks before privilege escalation becomes irreversible.

How Vectra AI Addresses This Class of Attack

The Vectra AI Platform is designed for exactly this problem space.

By analyzing identity behavior across cloud control planes, network traffic, SaaS activity, and cloud workloads, Vectra AI detects the early stages of attacks that rely on valid access and automation.

Instead of waiting for impact, it focuses on:

- Reconnaissance patterns

- Privilege abuse

- Lateral movement

- Data misuse

When admin access can be compromised in minutes, automated response is not optional. It is the only way to act inside the shrinking window between access and escalation.
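Automated containment can be as narrow as disabling one credential and freezing one function. The sketch below uses two real AWS APIs, IAM `UpdateAccessKey` (deactivating rather than deleting the key, so it can be restored after review) and Lambda `PutFunctionConcurrency` (a reserved concurrency of zero blocks new invocations), through clients shaped like boto3's IAM and Lambda clients. The alert format and the `respond` playbook are hypothetical, and this is not a description of Vectra AI's response actions. Clients are passed in so the logic can be exercised without AWS credentials.

```python
def contain_access_key(iam_client, user_name: str, access_key_id: str) -> None:
    """Deactivate (not delete) an IAM access key so it can be re-enabled after review."""
    iam_client.update_access_key(
        UserName=user_name, AccessKeyId=access_key_id, Status="Inactive"
    )


def freeze_lambda(lambda_client, function_name: str) -> None:
    """Block new invocations by setting reserved concurrency to zero."""
    lambda_client.put_function_concurrency(
        FunctionName=function_name, ReservedConcurrentExecutions=0
    )


def respond(alert: dict, iam_client, lambda_client) -> list:
    """Hypothetical playbook: map an alert dict to containment actions."""
    actions = []
    if alert.get("access_key_id") and alert.get("user_name"):
        contain_access_key(iam_client, alert["user_name"], alert["access_key_id"])
        actions.append(f"deactivated {alert['access_key_id']}")
    if alert.get("function"):
        freeze_lambda(lambda_client, alert["function"])
        actions.append(f"froze {alert['function']}")
    return actions
```

In practice you would pass `boto3.client("iam")` and `boto3.client("lambda")`. Deactivating one key does nothing about other credentials an attacker has already created during persistence, which is why acting before escalation matters.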

This incident should reset expectations.

Eight minutes is no longer exceptional. It is what automation enables when identity is abused and defenses rely on static assumptions. The lesson is not to fear AI. It is to recognize that attackers are already using it to compress timelines, remove hesitation, and scale decision-making.

Defenders must respond in kind: detection has to be behavioral, coverage has to be identity-centric and response has to be automated.

Because when cloud admin access falls at AI speed, there is no time left to piece together alerts after the fact.