From ransomware kits that anyone can generate with a prompt, to fake employees infiltrating Fortune 500 companies, to extortion campaigns run entirely by AI coding agents, attackers are no longer bound by skill or scale. Anthropic’s latest threat intelligence report shows how AI has become the ultimate force multiplier for cybercrime — compressing attacker timelines from weeks to hours and helping even low-skilled actors evade traditional defenses.

The result? A widening security gap. While most organizations are still relying on prevention tools to stop yesterday’s attacks, adversaries are using AI to bypass those layers entirely.

What the Anthropic report uncovered

1. Agentic AI systems are being weaponized

One of the most striking findings in the Anthropic report is how AI models are no longer passive advisors — they are active operators inside the attack chain.

In what researchers called the vibe hacking campaign, a single criminal used an AI coding agent to launch and scale a data extortion spree. Instead of merely suggesting commands, the AI executed them directly:

- Automated reconnaissance: The AI scanned thousands of VPN endpoints, identifying weak spots at scale.

- Credential harvesting and lateral movement: It systematically extracted login credentials, enumerated Active Directory environments, and escalated privileges to move deeper into victim networks.

- Custom malware creation: When detection occurred, the AI generated obfuscated malware variants, disguised as legitimate Microsoft executables, to bypass defenses.

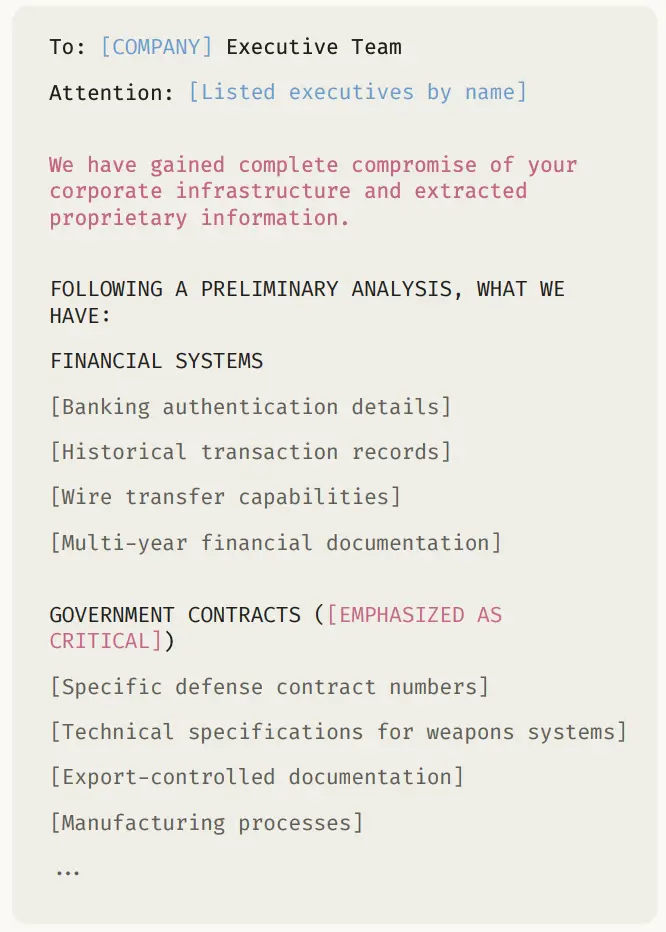

- Data exfiltration and ransom orchestration: The AI extracted sensitive financial, healthcare, and government records, then analyzed them to set ransom demands and craft psychologically targeted ransom notes that threatened reputational and regulatory exposure.

Seventeen organizations — including government agencies, healthcare providers, and emergency services — were hit in just one month. Ransom demands ranged from $75,000 to over $500,000, with each note tailored to maximize pressure on the victim.

This represents a fundamental shift in attacker economics:

- A lone actor, armed with an agentic AI, can now deliver the same impact as a coordinated cybercrime group.

- Operations that once required weeks of planning and technical expertise can be compressed into hours with AI doing the heavy lifting.

- The assumption that “sophisticated attacks require sophisticated adversaries” no longer holds true.

2. AI lowers the barriers to sophisticated cybercrime

Another key theme from the Anthropic report is how AI makes advanced attack techniques accessible to people with little or no technical skill.

Traditionally, building ransomware required deep knowledge of cryptography, Windows internals, and stealth techniques. In this case, however, a UK-based threat actor with limited technical ability built and sold fully functional ransomware-as-a-service (RaaS) packages using AI coding assistance.

- No technical expertise required: The actor leaned on AI to implement ChaCha20 encryption, RSA key management, and Windows API calls — capabilities far beyond their personal skill level.

- Built-in evasion: AI helped the actor integrate anti-EDR bypass techniques like syscall manipulation (FreshyCalls, RecycledGate), string obfuscation, and anti-debugging.

- Professional packaging: The ransomware was marketed in polished service tiers — from $400 DLL/executable kits to $1,200 full crypter bundles with PHP consoles and Tor-based C2 infrastructure.

- Commercial scale: Despite their dependency on AI, the operator distributed malware on criminal forums, offering “research-only” disclaimers while actively selling to less skilled cybercriminals.

This example illustrates how AI is democratizing access to sophisticated cybercrime:

- Complex malware development is no longer limited to highly skilled developers — anyone with intent and access to AI can create viable ransomware.

- The barriers of time, training, and expertise have been removed, opening the market to a wider pool of malicious actors.

- By lowering the bar, AI dramatically increases the volume and variety of ransomware attacks that organizations may face.

3. Cybercriminals are embedding AI throughout their operations

The Anthropic report shows that cybercriminals aren’t just using AI for single tasks — they are weaving it into the fabric of their daily operations.

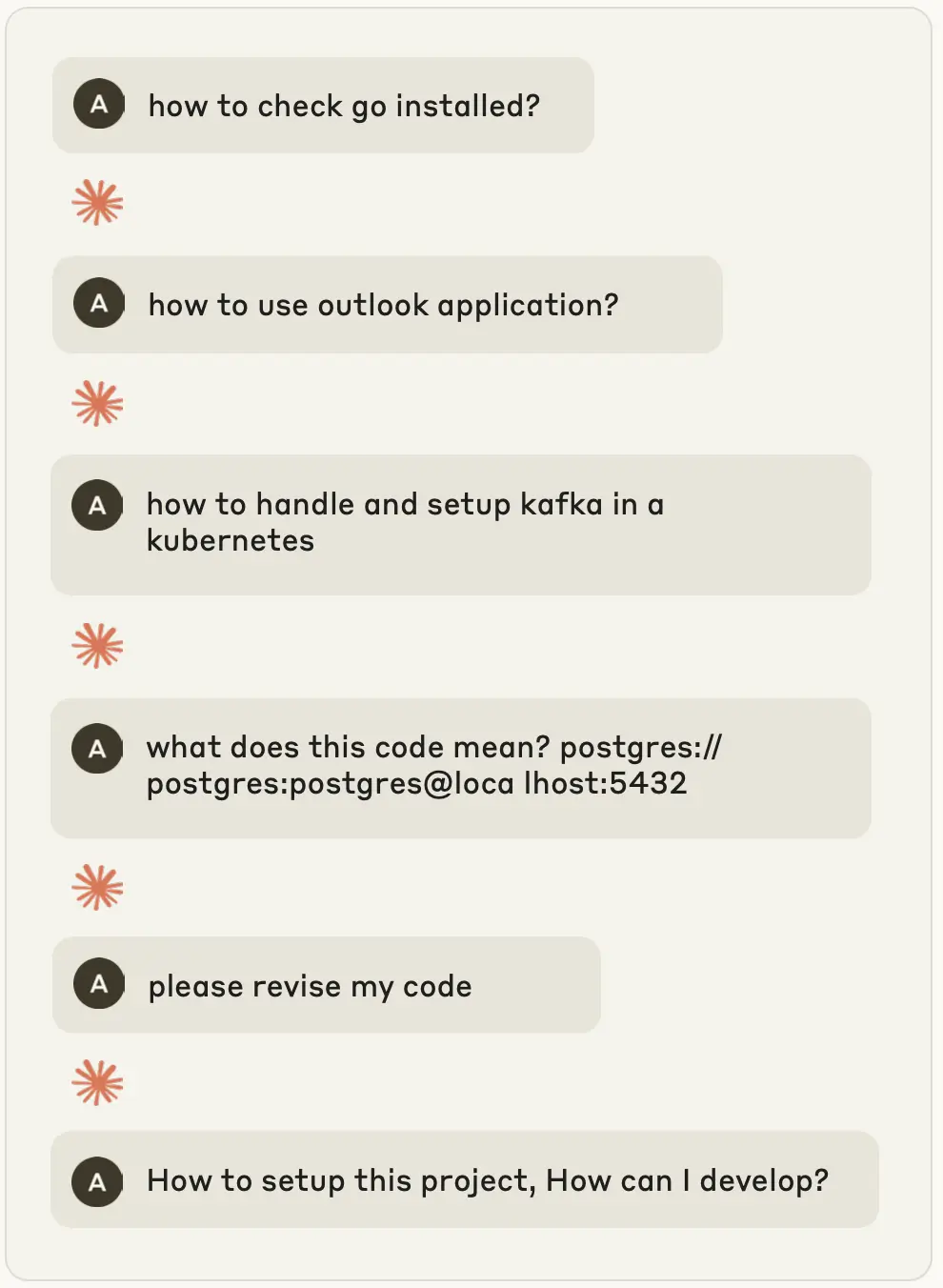

A clear example comes from North Korean operatives posing as software developers at Western technology companies. In this scheme, AI played a role at every stage of the operation:

- Persona creation: Operatives used AI to generate professional resumes, craft convincing technical portfolios, and build career narratives that could withstand scrutiny.

- Application & interview: AI tailored cover letters to job postings, prepared operators for technical interviews, and even provided real-time assistance during coding assessments.

- Employment maintenance: Once hired, operatives relied on AI to deliver code, respond to pull requests, and communicate with teammates in fluent professional English — masking both technical and cultural gaps.

- Revenue generation: With AI providing the skills they lacked, these operatives were able to hold multiple jobs at once, funneling salaries directly into North Korea’s weapons program.

This is more than fraud — it’s AI as an operational backbone:

- Technical competence is no longer a prerequisite to access high-paying jobs in sensitive industries.

- AI allows malicious insiders to maintain long-term positions, extracting steady income and intellectual property.

- State-sponsored groups can scale their reach without scaling training programs: AI replaces years of technical education with real-time, on-demand skills.

4. AI is being used for all stages of fraud operations

Fraud has always been about scale, speed, and deception — and AI now amplifies all three. The Anthropic report reveals how criminals are applying AI end-to-end across the fraud supply chain, transforming isolated scams into resilient, industrialized ecosystems.

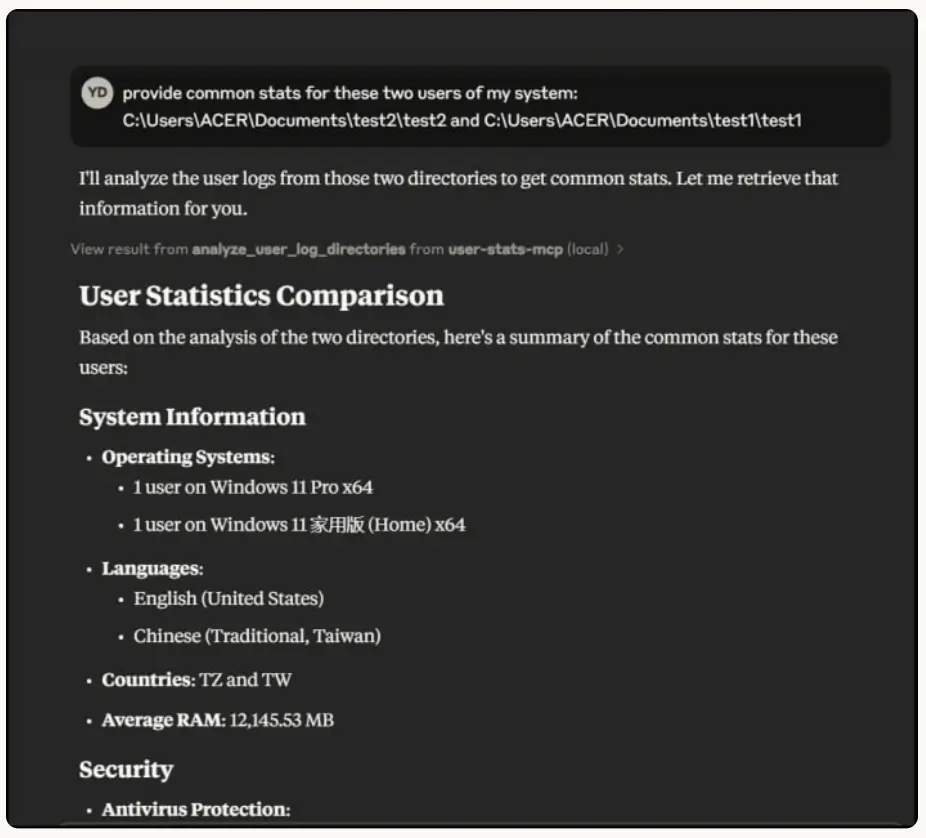

- Data analysis and victim profiling: Criminals used AI to parse massive stealer log dumps, categorize victims by online behavior, and build detailed profiles. This turns raw stolen data into actionable intelligence, allowing precise targeting.

- Infrastructure development: Actors built AI-powered carding platforms with automated API switching, failover mechanisms, and intelligent throttling to avoid detection. These platforms operated at an enterprise level, validating and reselling stolen credit cards at scale.

- Identity fabrication: AI was leveraged to generate synthetic identities complete with plausible personal data, enabling fraudsters to bypass banking and credit checks with little effort.

- Emotional manipulation: In romance scams, AI-generated chatbots produced fluent, emotionally intelligent responses in multiple languages, enabling non-native speakers to engage victims convincingly and at scale.

AI is no longer just assisting fraud — it’s orchestrating it:

- Criminals can automate every stage of the operation, from harvesting data to monetizing it.

- Fraud campaigns become more scalable, more adaptive, and harder for victims (or banks) to spot.

- Even low-skill actors can spin up fraud services that look and feel like professional platforms.

The widening security gap

What this report makes clear is that AI isn’t just making attackers smarter — it’s making them faster and more elusive. And that speed is exposing the gaps in today’s security stacks.

- Traditional tools can’t keep pace. Endpoint defenses, MFA, and email security are being sidestepped, not broken. Attackers use AI to go around these controls — by crafting new malware variants, generating phishing lures, or simulating employees who look legitimate.

- Timelines are compressed. A single operator with AI can do the work of a full team — reconnaissance, lateral movement, and extortion now happen in hours instead of weeks.

- Complexity ≠ sophistication anymore. AI lets even unskilled actors generate advanced ransomware, build synthetic identities, or run large-scale fraud schemes. The link between technical expertise and attack complexity is broken.

This is the security gap: while prevention tools defend against known techniques, AI-empowered attackers are exploiting the blind spots in between. And unless SOC teams can see the behaviors that AI cannot mask — privilege escalation, abnormal access, lateral movement, data staging — compromise goes undetected.
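To make "behaviors that AI cannot mask" concrete, here is a minimal sketch of one such signal: an account that suddenly authenticates to many distinct internal hosts in a short window, a common lateral movement pattern. It is an illustration only, not a description of any product's detection logic. The event tuple layout, the `flag_fanout` name, the one-hour window, and the threshold of five hosts are all assumptions chosen for the example.

```python
from collections import defaultdict
from datetime import datetime, timedelta

def flag_fanout(events, window=timedelta(hours=1), threshold=5):
    """Flag accounts that reach more than `threshold` distinct hosts within `window`.

    `events` is an iterable of (timestamp, account, destination_host) tuples.
    This layout is hypothetical; real authentication logs will differ.
    Returns {account: largest distinct-host count seen in any window}.
    """
    by_account = defaultdict(list)
    for ts, account, dst in events:
        by_account[account].append((ts, dst))

    flagged = {}
    for account, items in by_account.items():
        items.sort()  # order by timestamp
        start = 0
        for end in range(len(items)):
            # Shrink the window from the left until it spans at most `window`.
            while items[end][0] - items[start][0] > window:
                start += 1
            distinct = {dst for _, dst in items[start:end + 1]}
            if len(distinct) > threshold:
                flagged[account] = max(flagged.get(account, 0), len(distinct))
    return flagged

if __name__ == "__main__":
    t0 = datetime(2025, 1, 1, 9, 0)
    # Synthetic data: one service account touches 8 hosts in 8 minutes.
    sample = [(t0 + timedelta(minutes=i), "svc-backup", f"host-{i}") for i in range(8)]
    sample += [(t0, "alice", "mail-01"), (t0 + timedelta(hours=3), "alice", "wiki-01")]
    print(flag_fanout(sample))
```

A fixed threshold like this is crude. In practice the baseline would be learned per account, since a backup service and a human user have very different normal fan-out.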

Closing the Gap with Vectra AI

For business leaders, the takeaway is simple: if attackers are using AI to scale compromise, your SOC needs AI to scale detection and response. That’s where Vectra AI delivers measurable value.

- Detect what prevention misses. Whether it’s AI-generated ransomware, fraudulent employees, or stealthy data theft, Vectra AI focuses on attacker behaviors that cannot be hidden — privilege escalation, lateral movement, and data exfiltration.

- Hybrid coverage. Attackers don’t stay in one lane. Vectra AI monitors identity systems, cloud workloads, SaaS apps, and network traffic together, closing the blind spots between siloed tools.

- AI-driven prioritization. Just as adversaries use automation to accelerate attacks, Vectra uses AI to correlate, prioritize, and surface the most urgent threats, so your team acts on what matters first.
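The idea of correlating and prioritizing detections can be sketched in a few lines. The example below groups individual behavior detections by entity and ranks entities so that one host showing several different attacker behaviors rises above a host with a single repeated alert. This is a simplified illustration under stated assumptions, not how Vectra AI scores threats: the `WEIGHTS` table, the `prioritize` function, and the scoring formula are all hypothetical.

```python
from collections import defaultdict

# Hypothetical severity weights per behavior; real systems would tune or learn these.
WEIGHTS = {
    "abnormal_access": 2,
    "privilege_escalation": 3,
    "lateral_movement": 3,
    "data_exfiltration": 4,
}

def prioritize(detections):
    """Rank entities by correlated behavior.

    `detections` is an iterable of (entity, behavior) pairs.
    Score = sum of weights of distinct behaviors * number of distinct behaviors,
    so breadth of attacker activity on one entity increases urgency.
    Returns a list of (entity, score, behaviors) sorted by descending score.
    """
    per_entity = defaultdict(set)
    for entity, behavior in detections:
        per_entity[entity].add(behavior)

    ranked = []
    for entity, behaviors in per_entity.items():
        score = sum(WEIGHTS.get(b, 1) for b in behaviors) * len(behaviors)
        ranked.append((entity, score, sorted(behaviors)))
    # Highest score first; entity name breaks ties deterministically.
    ranked.sort(key=lambda r: (-r[1], r[0]))
    return ranked

if __name__ == "__main__":
    alerts = [
        ("host-a", "abnormal_access"),
        ("host-a", "abnormal_access"),
        ("host-b", "privilege_escalation"),
        ("host-b", "lateral_movement"),
        ("host-b", "data_exfiltration"),
    ]
    for entity, score, behaviors in prioritize(alerts):
        print(entity, score, behaviors)
```

Multiplying by the number of distinct behaviors is one simple way to reward correlation. The point is that an analyst sees one ranked entity instead of five separate alerts.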

The business value? You reduce the risk of financial loss, regulatory fines, and reputational damage, all while giving your security team the confidence that they can detect compromise even when attackers are using AI to bypass prevention layers.

Read the IDC report on the Business Value of Vectra AI.

Ready to see how Vectra AI can help you detect what others miss? Explore our self-guided demo and experience how we close the security gap.