For many security leaders, the most dangerous attacker isn’t on the outside; they’re already inside. Insider threats, whether from malicious employees or rogue admins, have already cleared the hardest hurdle in an attack: gaining access, often privileged access. That means they can operate with trusted credentials, existing permissions, and intimate knowledge of your systems and data.

This is why insider threats are often harder to detect and contain. According to IBM’s Cost of a Data Breach 2025 report, malicious insider attacks cost an average of $4.92 million and take 260 days to resolve, making them among the costliest and longest-lived incidents security leaders face.

Across active Vectra AI customer deployments, nearly 4 in 10 prioritized threats over a one-month period were insider threats. This isn’t rare. It’s happening now in real-world enterprise environments, and it’s happening often.

What Are the Gaps in Insider Threat Programs?

Even the most mature security programs often depend on a familiar trio of tools to manage insider threat risk, but each has a blind spot that attackers exploit:

- DLP - Flags or blocks certain data transfers, but can’t determine intent. If an insider downloads sensitive files they’re allowed to access, DLP sees it as normal business activity.

- EDR - Excellent at spotting malware and endpoint exploits, but blind to account misuse in SaaS, cloud, and identity layers where many insider incidents unfold.

- SIEM - Aggregates logs for investigation, but doesn’t connect the dots between subtle, separate actions across different systems without heavy manual correlation.

These gaps exist because insiders operate with legitimate permissions. Their actions blend into routine workflows, bypassing prevention-focused controls. Without correlating behaviors across identity, cloud, and on-premises networks, the signs remain scattered and unrecognized.

The result? By the time an insider threat becomes obvious to these tools, sensitive data has already left or damage has already been done.
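To make the correlation gap concrete, here is a minimal Python sketch of grouping events by identity across surfaces. It is an illustration only, not how any particular product works: the `Event` fields, the surface names, the seven-day window, and the three-surface threshold are all assumptions chosen for the example.

```python
from collections import defaultdict
from dataclasses import dataclass
from datetime import datetime, timedelta


@dataclass(frozen=True)
class Event:
    """One low-level log record. All field names here are hypothetical."""
    identity: str        # account that performed the action
    surface: str         # e.g. "network", "exchange", "sharepoint", "copilot"
    action: str
    timestamp: datetime


def correlate_by_identity(events, window=timedelta(days=7), min_surfaces=3):
    """Return identities seen on at least `min_surfaces` distinct surfaces
    within a single `window`, mapped to the events inside that window."""
    by_identity = defaultdict(list)
    for event in events:
        by_identity[event.identity].append(event)

    flagged = {}
    for identity, items in by_identity.items():
        items.sort(key=lambda e: e.timestamp)
        for i, first in enumerate(items):
            # Events from this starting point that fall inside the window.
            in_window = [e for e in items[i:] if e.timestamp - first.timestamp <= window]
            if len({e.surface for e in in_window}) >= min_surfaces:
                flagged[identity] = in_window
                break
    return flagged


# Made-up sample data: each event looks routine to a single-surface tool.
start = datetime(2025, 1, 6, 9, 0)
events = [
    Event("it-admin", "network", "bulk_file_read", start),
    Event("it-admin", "exchange", "external_forward_rule", start + timedelta(days=1)),
    Event("it-admin", "copilot", "sensitive_search", start + timedelta(days=2)),
    Event("it-admin", "sharepoint", "unusual_download", start + timedelta(days=3)),
    Event("analyst", "sharepoint", "download", start + timedelta(days=1)),
]
cases = correlate_by_identity(events)
print(sorted(cases))  # ['it-admin']
```

Viewed one surface at a time, every event above is permitted activity. Only when the records are joined on the identity does the multi-surface pattern stand out, which is the step that per-tool controls leave to manual correlation.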

How Does Generative AI Increase Insider Threat Risks?

The rise of Generative AI in the workplace, particularly tools like Copilot for Microsoft 365, brings a new dimension to insider threats.

If a malicious insider or compromised account has Copilot access, they can use its unified AI search to query across Word, SharePoint, Teams, Exchange, and other Microsoft surfaces in seconds. Sensitive data that might have taken hours or days to locate manually can be discovered and staged for exfiltration almost instantly.

Why This Matters For Insider Threats:

- Accelerated reconnaissance. Copilot removes latency from the discovery phase. An insider can surface credentials, financial data, or intellectual property at the speed of AI.

- Living-off-the-land advantage. Attackers use existing permissions and trusted enterprise AI tools, blending seamlessly with legitimate user activity.

- Limited native visibility. Security teams see minimal detail from Copilot audit logs, making it difficult to know what was searched for or returned.

- Insufficient native controls. Built-in Copilot restrictions do little to stop determined insiders from probing the environment for sensitive information, and native tools do not detect when an attacker compromises an identity and abuses its legitimate Copilot for M365 access.

Why Are Insider Threats So Hard to Detect?

Normal and malicious behaviors are nearly indistinguishable without context:

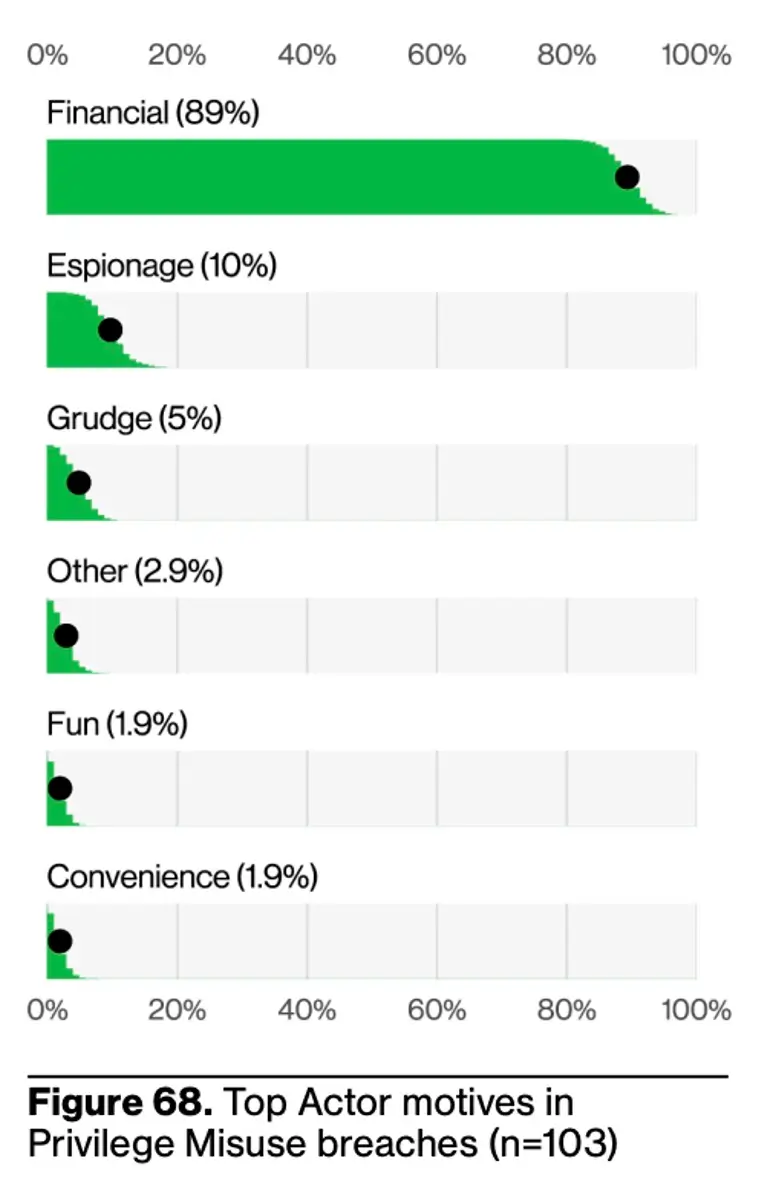

The challenge is compounded by motivation and opportunity. The vast majority of insider incidents are financially driven, according to the Verizon 2025 Data Breach Investigations Report, with rogue admins representing the highest-impact risk. In cloud-hosted environments, sensitive data can be identified and stolen in seconds, well before most security teams have time to react.

According to Verizon, 90% of top actors in privilege misuse breaches are internal, with 10% being partners. Financial incentive remains the number one motive.

The key is to understand how access is being used — the sequence, context, and intent behind each action — not merely that access occurred. Detecting real insider threats requires behavioral-based AI that can distinguish malicious intent from everyday anomalies.

Three Strategic Priorities to Strengthen Insider Threat Programs

1. Extend visibility to identity behaviors across all environments

Insiders operate across Active Directory, M365, SharePoint, Teams, Exchange, Entra ID, Copilot for M365, cloud, and network resources. Without correlation, each action appears benign in isolation. Full-surface identity visibility is essential.

2. Detect malicious intent earlier in the kill chain

Privilege escalation, unusual data staging, and persistence mechanisms often occur days or weeks before exfiltration. Early detection at this stage dramatically reduces impact and response costs.

3. Correlate and consolidate detections for decisive response

Security teams can’t investigate every anomaly. Behavioral monitoring distills thousands of low-level events into a handful of high-confidence cases with full context, enabling rapid and confident action.

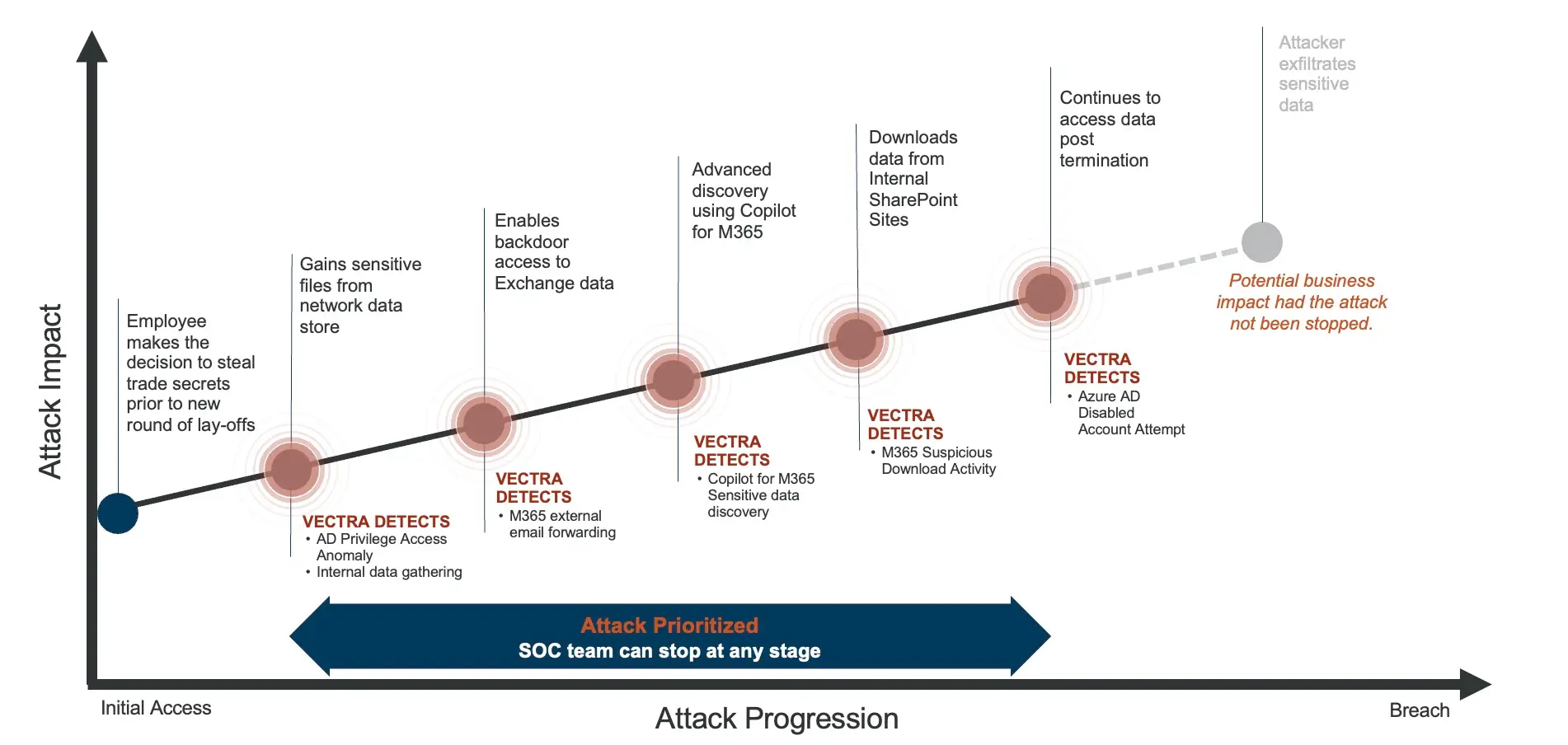
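One way to picture priorities 2 and 3 together is a simple scoring pass that weights detections by kill-chain stage and keeps only the identities whose combined score clears a threshold. The sketch below is hypothetical: the stage names, the weights in `STAGE_WEIGHTS`, and the threshold of 8 are assumptions made for illustration and do not describe Vectra AI's actual scoring.

```python
from collections import defaultdict

# Hypothetical weights: later kill-chain stages count for more.
STAGE_WEIGHTS = {
    "anomaly": 1,
    "privilege_escalation": 3,
    "data_staging": 4,
    "persistence": 4,
    "exfiltration": 6,
}


def prioritize(detections, threshold=8):
    """Group (identity, stage) detections into per-identity cases and keep
    only the cases whose combined score reaches `threshold`.

    Each distinct stage is counted once per identity, so a burst of repeated
    low-level anomalies cannot push a case over the threshold by itself.
    """
    stages_seen = defaultdict(set)
    for identity, stage in detections:
        stages_seen[identity].add(stage)

    cases = []
    for identity, stages in stages_seen.items():
        score = sum(STAGE_WEIGHTS.get(stage, 0) for stage in stages)
        if score >= threshold:
            cases.append((identity, score, sorted(stages)))
    # Highest-scoring cases first.
    return sorted(cases, key=lambda case: case[1], reverse=True)


# Made-up sample data: 55 low-level detections across three identities.
detections = (
    [("user-a", "anomaly")] * 50
    + [("user-b", "anomaly"), ("user-b", "privilege_escalation")]
    + [
        ("admin-x", "privilege_escalation"),
        ("admin-x", "data_staging"),
        ("admin-x", "persistence"),
    ]
)
cases = prioritize(detections)
print(cases)
# [('admin-x', 11, ['data_staging', 'persistence', 'privilege_escalation'])]
```

In this toy data, 55 detections collapse into a single case, and that case is raised on escalation, staging, and persistence alone, before any exfiltration event appears.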

A Real-World Case: How Vectra AI Stopped an Insider Threat

In one industrial manufacturing enterprise, an IT admin who was aware of upcoming layoffs:

- Gathered sensitive files from network data stores.

- Forwarded Exchange email to an external account.

- Used Copilot for M365 to discover sensitive data.

- Downloaded SharePoint data outside normal patterns.

- Attempted post-termination logins to disabled accounts.

Traditional controls didn’t connect these actions in time. Vectra AI did — correlating each step into a single, high-confidence case, enabling swift containment and supporting legal action.

The Vectra AI Difference for Insider Threats

- Proven risk reduction. Detects and stops insider threats earlier, minimizing financial and operational impact.

- Behavioral-based AI detection. Adds the behavioral layer missing from prevention-heavy programs.

- Multi-surface visibility. Identity, cloud, Gen AI and network, in one correlated view.

- Noise reduction at scale. 99.98% of detections are filtered before reaching analysts, according to Vectra AI Research.

- Enables rapid investigation and containment. Automated account lockdowns stop abuse before data leaves, and full metadata context shows which files were accessed and the full scope of the attack.

- Integrates with existing tools. Complements and strengthens DLP, EDR, and SIEM to cut insider threat risk without changing your existing workflow.

Insider threats are not just a compliance concern — they are a material business risk. Addressing them requires evolving beyond content inspection to identity-based behavioral detection.

The insider is already inside. The question is: will you see what they’re doing in time to stop them?

Take the Next Step in Insider Threat Protection

Insider threats demand more than prevention controls; they require behavioral-based AI detection that can see malicious intent in real time, across every identity and environment. Ready to strengthen your insider threat detection program? See the Vectra AI Platform in action: